Gateway Server for Data Factory

How to start a self-hosted ADF runtime when you need it and pause it when you don't?

Self-hosted integration runtimes are Azure Data Factory's way of connecting cloud systems (like ADF itself or Azure Blob Storage or your favorite data cloud) with shielded systems behind a firewall, e.g. on-premise databases or legacy systems only allowing connections via ODBC (Abacus 😎) or SFTP servers with IP restrictions.

Let's assume here, you already have an Azure virtual network up and running, which means, a VM inside your network is not an issue. If you don't, that will be the major obstacle to set up, not the gateway itself 😅

Installing the ADF self-hosted integration runtime on an Azure VM is also not the thing I want to talk about here, that is easy enough. My topic is really: How to start the VM when you need it and pause it when you don't?

The straight forward approach would be to create an ADF pipeline triggered at some time in the morning/evening to toggle the VM. However, the VM would still sit around most of the day doing nothing. Instead, let's start it via ADF just before an activity needs it, and stop it again right afterwards.

To start it, you'll need a web-activity POSTing an empty body to:

https://management.azure.com/subscriptions/[subscription id of the VM]/resourceGroups/[resource group name of the VM]/providers/Microsoft.Compute/virtualMachines/[name of the VM]/Start?api-version=2020-12-01

Ideally, your ADF already has a system assigned managed identity in your Entra (f.k.a. Azure Active Directory) and has the role "Desktop Virtualization Power On Off Contributor" on the VM (to be able to switch it on or off, obviously).

Before unnecessarily sending the start-request to the VM, you might want to check if it's running already. To do so, GET it's status before and condition the start-request on it's output:

https://management.azure.com/subscriptions/[subscription id of the VM]/resourceGroups/[resource group name of the VM]/providers/Microsoft.Compute/virtualMachines/[name of the VM]/InstanceView?api-version=2020-12-01

This activity's result will be in

@activity('[name of the check-status-activity]').output.statuses[1].displayStatus

To ensure the VM (and the self-hosted integration runtime on it) is really up and running, you might want to give it a little time after the start-request is done, maybe even check it's status again. This could be done with an until-activity like this:

The same way, after the actual pipeline utilizing the VM is done, it can be stopped (or, as Azure calls it, deallocated) again with a POST request to

https://management.azure.com/subscriptions/[subscription id of the VM]/resourceGroups/[resource group name of the VM]/providers/Microsoft.Compute/virtualMachines/[name of the VM]/Deallocate?api-version=2020-12-01

With the VM deallocated, it doesn't cost anything anymore. And that is, after all, the idea behind this article 🤓

Still, one thing is left to discuss: What if two pipelines use the VM in parallel? If the first of those is done, it might stop the VM and therefore interrupt the second. How do we prevent this from happening?

Since you might be using multiple pipeline tools (a.k.a. ETL/ELT, so besides ADF maybe Power Automate or Fivetran or Portable or ...), it might be difficult to scan for relevant current pipeline runs (only those utilizing the VM) in all of them. The trick is to register each run utilizing the VM in an independent log. One possibility here would be a table in Azure Table Storage as it usually is easy to read and write there (with whatever pipeline tool you use), it's scalable and very cheap. Alternatively one could use a SharePoint list or Google Sheet. Basically any (as cheap as possible) table accessible to ADF (et al) will do:

Before ADF then stops the VM, it can check if anything on the list indicates there is still a pipeline running and utilizing the VM:

If this activity returns any row, there is still some pipeline utilizing the VM. Hence, the stop-activity (cf. above) is conditioned both on the current status of the VM and on the result of this check-log-activity:

@and(equals(activity('[name of the check-status-activity]').output.statuses[1].displayStatus, 'VM running'), not(contains(activity('[name of the check-log-activity]').output, 'firstRow')))

There is (of course) one downside to this approach: Each pipeline utilizing the VM needs to "register" itself in the log first (to prevent the VM from getting stopped by something else) and then update its entry to the log once it is done (to not prevent the VM from getting stopped once no pipeline needs it anymore).

However, it's not too hard to add this to a pipeline. In ADF, the most easy way to add/update something in an Azure Table Storage table is a copy activity (rather than a web activity, due to the managed authentication). A copy activity, of course, needs a source and a sink. The sink is the log table, but what is the source, if all we want to achieve is to write the current pipeline's name, run ID, a status and maybe some timestamps to the log?

The copy activity can add some columns to the source, but something must be the source. A simple CSV file on Azure Blob Storage containing something (e.g. the pipeline status) will do the trick:

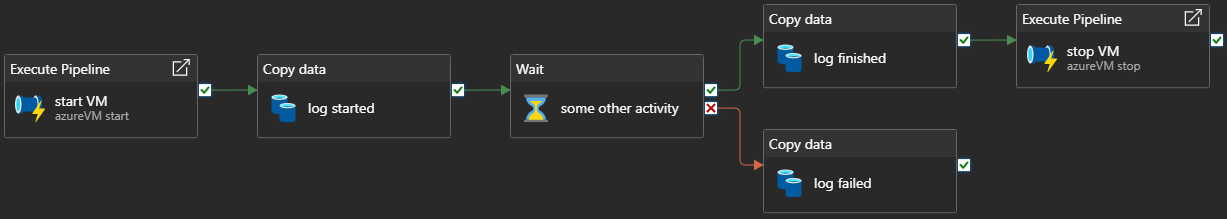

Ultimately, we have a pipeline to ...

- Start the VM (if it's not already running)

- Register itself in a log table

- Run some pipeline activity via a self-hosted integration runtime on the freshly started VM

- Update the log table

- Stop the VM (if not prevented by any other entry in the log)

Tadaa, a dynamic self-hosted integration runtime with optimized cost 🥳